MSNoise

master

- Installation

- Full Installation

- MySQL Server and Workbench

- Database Structure - Tables

- Building this documentation

- Using the development version

- Workflow

- Installer (initialize Project)

- MSNoise Admin (Web Interface)

- Populate Station Table

- Scan Archive

- New Jobs

- Compute Cross-Correlations

- Stack

- Compute MWCS

- Compute dt/t

- Plotting

- Customizing Plots

- Data Availability Plot

- Station Map

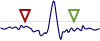

- Interferogram Plot

- CCF vs Time

- MWCS Plot

- Distance Plot

- dv/v Plot

- dt/t Plot
